Subsections of Ansible

Ad Hoc Ansible Commands

Ad hoc commands are ansible tasks you can run against managed hosts without the need of a playbook or script. These are used for bringing nodes to their desired states, verifying playbook results, and verifying nodes meet any needed criteria/pre-requisites. These must be ran as the ansible user (whatever your remote_user directive is set to under [defaults] in ansible.cfg)

Run the user module with the argument name=lisa on all hosts to make sure the user “lisa” exists. If the user doesn’t exist, it will be created on the remote system:

ansible all -m user -a "name=lisa"

{command} {host} -m {module} -a {"argument1 argument2 argument3"}

In our lab:

ansible all -m user -a "name=lisa"This Ad Hoc command created user “Lisa” on ansible1 and ansible2. If we run the command again, we get “SUCCESS” on the first line instead of “CHANGED”. Which means the hosts already meet the requirements:

[ansible@control base]$ ansible all -m user -a "name=lisa"indempotent Regardless of current condition, the host is brought to the desired state. Even if you run the command multiple times.

Run the command id lisa on all managed hosts:

[ansible@control base]$ ansible all -m command -a "id lisa"Here, the command module is used to run a command on the specified hosts. And the output is displayed on screen. To note, this does not show up in our ansible user’s command history on the host:

[ansible@ansible1 ~]$ historyRemove the user lisa from all managed hosts:

[ansible@control base]$ ansible all -m user -a "name=lisa state=absent"You can also use the -u option to specify the Ansible user that Ansible will use to run the command. Remember, with no modules specified, ansible uses the command module:

ansible all -a "free -m" -u david

`

Ad hoc commands in Scripts

Follow normal bash scripting guidelines to run ansible commands in a script:

[ansible@control base]$ vim httpd-ansible.shLet’s set up a script that installs and starts/enables httpd, creates a user called “anna”, and copies the ansible control node’s /etc/hosts file to /tmp/ on the managed nodes:

#!/bin/bash

ansible all -m yum -a "name=httpd state=latest"

ansible all -m service -a "name=httpd state=started enabled=yes"

ansible all -m user -a "name=anna"

ansible all -m copy -a "src=/etc/hosts dest=/tmp/hosts"[ansible@control base]$ chmod +x httpd-ansible.sh

[ansible@control base]$ ./httpd-ansible.sh

web2 | UNREACHABLE! => {

"changed": false,

"msg": "Failed to connect to the host via ssh: ssh: Could not resolve hostname web2: Name or service not known",

"unreachable": true

}

web1 | UNREACHABLE! => {

"changed": false,

"msg": "Failed to connect to the host via ssh: ssh: Could not resolve hostname web1: Name or service not known",

"unreachable": true

}

ansible1 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"msg": "Nothing to do",

"rc": 0,

"results": []

}

ansible2 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"msg": "Nothing to do",

"rc": 0,

"results": []

}

... <-- Results truncatedAnd from the ansible1 node we can verify:

[ansible@ansible1 ~]$ cat /etc/passwd | grep anna

anna:x:1001:1001::/home/anna:/bin/bash[ansible@ansible1 ~]$ cat /tmp/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.124.201 ansible1

192.168.124.202 ansible2View a file from a managed node:

ansible ansible1 -a "cat /somfile.txt"

Ansible Documentation

ansible-navigator

Was advised to start using this tools for Ansible because it is available during the RHCE exam. https://ansible.readthedocs.io/projects/navigator/

Ansible Docs

ansible-doc

https://docs.ansible.com/ansible/latest/cli/ansible-doc.html

Ansible Facts

An Ansible fact is a variable that contains information about a target system.This information can be used in conditional statements to tailor playbooks to that system. Systems facts are system property values. Custom facts are user-defined variables stored on managed hosts. system.

Facts are collected when Ansible executes on the remote system. You’ll see a “Gathering Facts” task everytime you run a playbook. These facts are then stored in the variable ansible_facts.

Use the debug module to check the value of variables. This module requires variables to be enclosed in curly brackets. This example shows a large list of facts from managed nodes:

---

- name: show facts

hosts: all

tasks:

- name: show facts

debug:

var: ansible_factsThere are two supported formats for using Ansible fact variables:

It’s recommended to use square brackets: ansible_facts['default_ipv4']['address'] but dotted notation is also supported for now: ansible_facts.default_ipv4.address

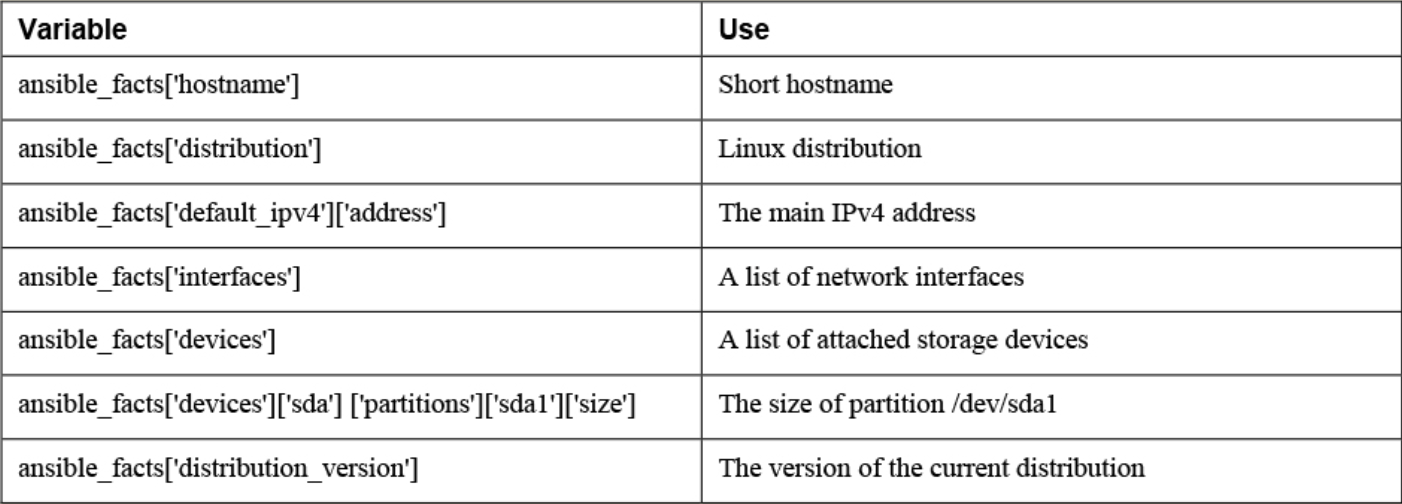

Commonly used ansible_facts:

There are additional Ansible modules for gathering more information. See `ansible-doc -l | grep fact

package_facts module collects information about software packages installed on managed hosts.

Two ways facts are displayed

Ansible_facts variable (current way)

- All facts are stored in a dictionary with the name ansible_facts, and items in this dictionary are addressed using the notation with square brackets

- ie:

ansible_facts['distribution_version'] - Recommended to use this.

injected variables (old way)

-

Variable are prefixed with the string ansible_

-

Will lose support eventually

-

Old approach and the new approach both still occur.

ansible ansible1 -m setupcommand Ansible facts are injected as variables.

ansible1 | SUCCESS => {

"ansible_facts": {

"ansible_all_ipv4_addresses": [

"192.168.122.1",

"192.168.4.201"

],

"ansible_all_ipv6_addresses": [

"fe80::e564:5033:5dec:aead"

],

"ansible_apparmor": {Comparing ansible_facts Versus Injected Facts as Variables

ansible_facts Injected Variable

--------------------------------------------------------------

ansible_facts['hostname'] ansible_hostname

ansible_facts['distribution'] ansible_distribution

ansible_facts['default_ipv4']['address'] ansible_default_ipv4['address']

ansible_facts['interfaces'] ansible_interfaces

ansible_facts['devices'] ansible_devices

ansible_facts['devices']['sda']\

['partitions']['sda1']['size'] ansible_devices['sda']['partitions']['sda1']['size']

ansible_facts['distribution_version'] ansible_distribution_versionDifferent notations can be used in either method, the listings address the facts in dotted notation, not in the notation with square brackets.

Addressing Facts with Injected Variables:

- hosts: all

tasks:

- name: show IP address

debug:

msg: >

This host uses IP address {{ ansible_default_ipv4.address }}Addressing Facts Using the ansible_facts Variable

---

- hosts: all

tasks:

- name: show IP address

debug:

msg: >

This host uses IP address {{ ansible_facts.default_ipv4.address }}If, for some reason, you want the method where facts are injected into variables to be the default method, you can use inject_facts_as_vars=true in the [default] section of the ansible.cfg file.

• In Ansible versions since 2.5, all facts are stored in one variable: ansible_facts. This method is used while gathering facts from a playbook.

• Before Ansible version 2.5, facts were injected into variables such as ansible_hostname. This method is used by the setup module. (Note that this may change in future versions of Ansible.)

• Facts can be addressed in dotted notation:

{{ansible_facts.default_ipv4.address }}

• Alternatively, facts can be addressed in square brackets notation:

{{ ansible_facts['default_ipv4']['address'] }}. (preferred)

Managing Fact Gathering

By default, upon execution of each playbook, facts are gathered. This does slow down playbooks, and for that reason, it is possible to disable fact gathering completely. To do so, you can use the gather_facts: no parameter in the play header. If later in the same playbook it is necessary to gather facts, you can do this by running the setup module in a task.

Even if it is possible to disable fact gathering for all of your Ansible configuration, this practice is not recommended. Too many playbooks use conditionals that are based on the current value of facts, and all of these conditionals would stop working if fact gathering were disabled altogether.

As an alternative to make working with facts more efficient, you can disable a fact cache. To do so, you need to install an external plug-in. Currently, two plug-ins are available for this purpose: jsonfile and redis. To configure fact caching using the redis plug-in, you need to install it first. Next, you can enable fact caching through ansible.cfg.

The following procedure describes how to do this:

1. Use yum install redis.

2. Use service redis start.

3. Use pip install redis.

4. Edit /etc/ansible/ansible.cfg and ensure it contains the following parameters:

[defaults]

gathering = smart

fact_caching = redis

fact_caching_timeout = 86400Note

Fact caching can be convenient but should be used with caution. If, for instance, a playbook installs a certain package only if a sufficient amount of disk space is available, it should not do this based on information that may be up to 24 hours old. For that reason, using a fact cache is not recommended in many situations.

Custom Facts

-

Used to provide a host with arbitrary values that Ansible can use to change the behavior of plays.

-

can be provided as static files.

-

files must

- be in either INI or JSON format,

- have the extension .fact, and

- on the managed hosts must be stored in the /etc/ansible/facts.d directory.

-

can be generated by a script, and

- in that case the only requirement is that the script must generate its output in JSON format.

Dynamic custom facts are useful because they allow the facts to be determined at the moment that a script is running. provides an example of a static custom fact file.

Custom Facts Sample File:

[packages]

web_package = httpd

ftp_package = vsftpd

[services]

web_service = httpd

ftp_service = vsftpdTo get the custom facts files on the managed hosts, you can use a playbook that copies a local custom fact file (existing in the current Ansible project directory) to the appropriate location on the managed hosts. Notice that this playbook uses variables, which are explained in more detail in the section titled “Working with Variables.”

---

- name: Install custom facts

hosts: all

vars:

remote_dir: /etc/ansible/facts.d

facts_file: listing68.fact

tasks:

- name: create remote directory

file:

state: directory

recurse: yes

path: "{{ remote_dir }}"

- name: install new facts

copy:

src: "{{ facts_file }}"

dest: "{{ remote_dir }}"Custom facts are stored in the variable ansible_facts.ansible_local. In this variable, you use the filename of the custom fact file and the label in the custom fact file. For instance, after you run the playbook in Listing 6-9, the web_package fact that was defined in listing68.fact is accessible as

{{ ansible_facts[’ansible_local’][’listing67’][’packages’][’web_package’] }}

To verify, you can use the setup module with the filter argument. Notice that because the setup module produces injected variables as a result, the ad hoc command to use is ansible all -m setup -a "filter=ansible_local" . The command ansible all -m setup -a "filter=ansible_facts\['ansible_local'\]" does not work.

Lab Working with Ansible Facts

1. Create a custom fact file with the name custom.fact and the following contents:

[software]

package = httpd

service = httpd

state = started

enabled = true2. Write a playbook with the name copy_facts.yaml and the following contents:

---

- name: copy custom facts

become: yes

hosts: ansible1

tasks:

- name: create the custom facts directory

file:

state: directory

recurse: yes

path: /etc/ansible/facts.d

- name: copy the custom facts

copy:

src: custom.fact

dest: /etc/ansible/facts.d3. Apply the playbook using ansible-playbook copy_facts.yaml -i inventory

4. Check the availability of the custom facts by using ansible all -m setup -a "filter=ansible_local" -i inventory

5. Use an ad hoc command to ensure that the httpd service is not installed on any of the managed servers: ansible all -m yum -a "name=httpd state=absent" -i inventory -b

6. Create a playbook with the name setup_with_facts.yaml that installs and enables the httpd service, using the custom facts:

---

- name: install and start the web service

hosts: ansible1

tasks:

- name: install the package

yum:

name: "{{ ansible_facts['ansible_local']['custom']['software']['package'] }}"

state: latest

- name: start the service

service:

name: "{{ ansible_facts['ansible_local']['custom']['software']['service'] }}"

state: "{{ ansible_facts['ansible_local']['custom']['software']['state'] }}"

enabled: "{{ ansible_facts['ansible_local']['custom']['software']['enabled'] }}"7. Run the playbook to install and set up the service by using ansible-playbook setup_with_facts.yaml -i inventory -b

8. Use an ad hoc command to verify the service is running: ansible ansible1 -a "systemctl status httpd" -i inventory -b

Ansible Galaxy Roles

Using Ansible Galaxy Roles

- Ansible Galaxy is a public library of Ansible content and contains thousands of roles that have been provided by community members.

Working with Galaxy

The easiest way to work with Ansible Galaxy is to use the website at https://galaxy.ansible.com:

-

Use the Search Feature to Search for Specific Packages

-

In the result of any Search action, you see a list of collections as well as a list of roles.

-

An Ansible Galaxy collection is a distribution format for Ansible content.

-

It can contain roles, but also playbooks, modules, and plug-ins.

-

In most cases you just need the roles, not the collection: roles contain all that you include in the playbooks you’re working with.

-

Some important indicators are the number of times the role has been downloaded and the score of the role.

-

This information enables you to easily distinguish between commonly used roles and roles that are not used that often.

-

Also, you can use tags to make identifying Galaxy roles easier.

-

These tags provide more information about a role and make it possible to search for roles in a more efficient way.

- You can download roles directly from the Ansible Galaxy website

- You can also use the

ansible-galaxycommand

Using the ansible-galaxy Command

ansible-galaxy search

- Find roles based on many different keywords and manage them.

- Must provide a string as an argument.

- Ansible searches for this string in the name and description of the roles.

Useful Command-Line Options: –platforms

- Operating system platform to search for –author

- GitHub username of the author –galaxy-tags

- Additional tags to filter by

`ansible-galaxy info

- Get more information about a role.

[ansible@control ansible-lab]$ ansible-galaxy info geerlingguy.docker

Role: geerlingguy.docker

description: Docker for Linux.

commit: 9115e969c1e57a1639160d9af3477f09734c94ac

commit_message: Merge pull request #501 from adamus1red/adamus1red/alpine-compose

add compose package to Alpine specific variables

created: 2023-05-08T20:49:45.679874Z

download_count: 23592264

github_branch: master

github_repo: ansible-role-docker

github_user: geerlingguy

id: 10923

imported: 2025-03-24T00:01:45.901567

modified: 2025-03-24T00:01:47.840887Z

path: ('/home/ansible/.ansible/roles', '/usr/share/ansible/roles', '/etc/ansible/roles')

upstream_id: None

username: geerlingguyManaging Ansible Galaxy Roles

ansible-galaxy install

- Install a role

- normally installs the role into the ~/.ansible/roles directory because this role is specified in the role_path setting in ansible.cfg.

- If you want roles to be installed in another directory, consider changing this parameter.

-p - option to install the role to a different role path directory.

requirements file.

- YAML file that you can include when using the

ansible-rolescommand.

- src: geerlingguy.nginx

version: "2.7.0"- possible to add roles from sources other than Ansible Galaxy, such as a Git repository or a tarball.

- In that case, you must specify the exact URL to the role using the

srcoption. - When you are installing roles from a Git repository, the

scmkeyword is also required and must be set togit.

To install a role using the requirements file, you can use the -r option with the ansible-galaxy install command:

ansible-galaxy install -r roles/requirements.yml

ansible-galaxy list

- Get a list of currently installed roles

ansible-galaxy remove

- Remove roles from your system.

LAB: Using ansible-galaxy to Manage Roles

- Type

ansible-galaxy search --author geerlingguy --platforms ELto see a list of roles that geerlingguy has created. - Make the command more specific and type

ansible-galaxy search nginx --author geerlingguy --platforms ELto find the geerlingguy.nginx role. - Request more information about this role by using

ansible-galaxy info geerlingguy.nginx. - Create a requirements file with the name listing96.yaml and give this file the following contents:

- src: geerlingguy.nginx

version: "2.7.0"-

Add the line

roles_path = /home/ansible/rolesto the ansible.cfg file. -

Use the command

ansible-galaxy install -r listing96.yamlto install the role from the requirements file. It is possible that by the time you run this exercise, the specified version 2.7.0 is no longer available. If that is the case, useansible-galaxy infoagain to find a version that still is available, and change the requirements file accordingly. -

Type

ansible-galaxy listto verify that the new role was successfully installed on your system. -

Write a playbook with the name exercise92.yaml that uses the role and has the following contents:

---

- name: install nginx using Galaxy role

hosts: ansible2

roles:

- geerlingguy.nginx- Run the playbook using

ansible-playbook exercise92.yamland observe that the new role is installed from the custom roles path.

Ansible Inventory and Ansible.cfg

Ansible projects

For small companies, you can use a single Ansible configuration. But for larger ones, it’s a good idea to use different project directories. A project directory contains everything you need to work on a single project. Including:

- playbooks

- variable files

- task files

- inventory files

- ansible.cfg

playbook An Ansible script written in YAML that enforce the desired configuration on manage hosts.

Inventory

A file that Identifies hosts that Ansible has to manage. You can also use this to list and group hosts and specify host variables. Each project should have it’s own inventory file.

/etc/ansible/hosts

- can be used for system wide inventory.

- default if no inventory file is specified.

- has some basic inventory formatting info if you forget)

- Ansible will also target localhosts if no hosts are found in the inventory file.

- It’s a good idea to store inventory files in large environments in their own project folders.

localhost is not defined in inventory. It is an implicit host that is usable and refers to the Ansible control machine. Using localhost can be a good way to verify the accessibility of services on managed hosts.

Listing hosts

List hosts by IP address or hostname. You can list a range of hosts in an inventory file as well such as web-server[1:10].example.com

ansible1:2222 < specify ssh port if the host is not using the default port 22

ansible2

10.0.10.55

web-server[1:10].example.comListing groups

You can list groups and groups of groups. See the groups web and db are included in the group “servers:children”

ansible1

ansible2

10.0.10.55

web-server[1:10].example.com

[web]

web-server[1:10].example.com

[db]

db1

db2

[servers:children] <-- servers is the group of groups and children is the parameter that specifies child groups

web

dbThere are 3 general approaches to using groups:

Functional groups Address a specific group of hosts according to use. Such as web servers or database servers.

Regional host groups Used when working with region oriented infrastructure. Such as USA, Canada.

Staging host groups Used to address different hosts according to the staging phase that the current environment is in. Such as testing, development, production.

Undefined host groups are called implicit host groups. These are all, ungrouped, and localhost. Names making the meaning obvious.

Host variables

In older versions of Ansible you could define variables for hosts. This is no longer used. Example:

[groupname:vars]

ansible=ansible_userVariables are now set using host_vars and group_vars directories instead.

Multiple inventory files

Put all inventory files in a directory and specify the directory as the inventory to be used. For dynamic directories you also need to set the execution bit on the inventory file.

Ansible Playbooks

- Exploring playbooks

- YAML

- Managing Multiplay Playbooks

Lets create our first playbook:

[ansible@control base]$ vim playbook.yaml

---

- name: install start and enable httpd <-- play is at the highest level

hosts: all

tasks: <-- play has a list of tasks

- name: install package <-- name of task 1

yum: <-- module

name: httpd <-- argument 1

state: installed <-- argument 2

- name: start and enable service <-- task 2

service:

name: httpd

state: started

enabled: yesThere are thee dashes at the top of the playbook. And sometimes you’ll find three dots at the end of a playbook. These make it easy to isolate the playbook and embed the playbook code into other projects.

Playbooks are written in YAML format and saved as either .yml or .yaml. YAML specifies objects as key-value pairs (dictionaries). Key value pairs can be listed in either key: value (preferred) or key=value. And dashes specify lists of embedded objects.

There is a collection of one or more plays in a playbook. Each play targets specific hosts and lists tasks to perform on those hosts. There is one play here with the name “install start and enable httpd”. You target the host names to target at the top of the play, not in the individual tasks performed.

Each task is identified by “- name” (not required but recommended for troubleshooting and identifying tasks). Then the module is listed with arguments and their values under that.

Indentation is important here. It identifies the relationships between different elements. Data elements at the same level must have the same indentation. And items that are children or properties of another element must be indented more than their parent elements.

Indentation is created using spaces. Usually two spaces is used, but not required. You cannot use tabs for indentation.

You can also edit your .vimrc file to help with indentation when it detects that you are working with a YAML file:

vim ~/.vimrc

autocmd FileType yaml setlocal ai ts=2 sw=2 etRequired elements:

- hosts - name of host(s) to perform play on

- name - name of the play

- tasks - one or more tasks to execute for this play

To run a playbook:

[ansible@control base]$ ansible-playbook playbook.yaml

# Name of the play

PLAY [install start and enable http+userd] ***********************************************

# Overview of tasks and the hosts it was successful on

TASK [Gathering Facts] **************************************************************

fatal: [web1]: UNREACHABLE! => {"changed": false, "msg": "Failed to connect to the host via ssh: ssh: Could not resolve hostname web1: Name or service not known", "unreachable": true}

fatal: [web2]: UNREACHABLE! => {"changed": false, "msg": "Failed to connect to the host via ssh: ssh: Could not resolve hostname web2: Name or service not known", "unreachable": true}

ok: [ansible1]

ok: [ansible2]

TASK [install package] **************************************************************

ok: [ansible1]

ok: [ansible2]

TASK [start and enable service] *****************************************************

ok: [ansible2]

ok: [ansible1]

# overview of the status of each task

PLAY RECAP **************************************************************************

ansible1 : ok=3 (no changes required) changed=0 (indicates the task was successful and target node was modified.) unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

ansible2 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

web1 : ok=0 changed=0 unreachable=1 failed=0 skipped=0 rescued=0 ignored=0

web2 : ok=0 changed=0 unreachable=1 failed=0 skipped=0 rescued=0 ignored=0 Before running tasks, the ansible-playbook command gathers facts (current configuration and settings) about managed nodes.

How to undo playbook modifications

Ansible does not have a built in feature to undo a playbook that you ran. So to undo changes, you need to make another playbook that defines the new desired state of the host.

Working with YAML

Key value pairs can also be listed as:

tasks:

- name: install vsftpd

yum: name=vsftpd

- name: enable vsftpd

service: name=vsftpd enabled=true

- name: create readme fileBut better to list them as such for better readability:

copy:

content: "welcome to the FTP server\n"

dest: /var/ftp/pub/README

force: no

mode: 0444Some modules support multiple values for a single key:

---

- name: install multiple packages

hosts: all

tasks:

- name: install packages

yum:

name: <-- key with multiple values

- nmap

- httpd

- vsftpd

state: latest <-- will install and/or update to latest versionYAML Strings

Valid fomats for a string in YAML:

super string"super string"'super string'

When inserting text into a file, you may have to deal with spacing. You can either preserve newline characters with a pipe | such as:

- name: Using | to preserve newlines

copy:

dest: /tmp/rendezvous-with-death.txt

content: |

I have a rendezvous with Death

At some disputed barricade,

When Spring comes back with rustling shade

And apple-blossoms fill the air—Output:

I have a rendezvous with Death

At some disputed barricade,

When Spring comes back with rustling shade

And apple-blossoms fill the air—Or chose not to with a carrot >

- name: Using > to fold lines into one

copy:

dest: /tmp/rendezvous-with-death.txt

content: >

I have a rendezvous with Death

At some disputed barricade,

When Spring comes back with rustling shade

And apple-blossoms fill the air—Output:

I have a rendezvous with Death At some disputed barricade, When Spring comes back with rustling shade And apple-blossoms fill the air—Checking syntax with --syntax-check

You can use the --syntax-check flag to check a playbook for errors. The ansible-playbook command does check syntax by default though, and will throw the same error messages. The syntax check stops after detecting a single error. So you will need to fix the first errors in order to see errors further in the file. I’ve added a tab in front of the host key to demonstrate:

[ansible@control base]$ cat playbook.yaml

---

- name: install start and enable httpd

hosts: all

tasks:

- name: install package

yum:

name: httpd

state: installed

- name: start and enable service

service:

name: httpd

state: started

enabled: yes

[ansible@control base]$ ansible-playbook --syntax-check playbook.yaml

ERROR! We were unable to read either as JSON nor YAML, these are the errors we got from each:

JSON: Expecting value: line 1 column 1 (char 0)

Syntax Error while loading YAML.

mapping values are not allowed in this context

The error appears to be in '/home/ansible/base/playbook.yaml': line 3, column 10, but may

be elsewhere in the file depending on the exact syntax problem.

The offending line appears to be:

- name: install start and enable httpd

hosts: all

^ hereAnd here it is again, after fixing the syntax error:

[ansible@control base]$ vim playbook.yaml

[ansible@control base]$ cat playbook.yaml

---

- name: install start and enable httpd

hosts: all

tasks:

- name: install package

yum:

name: httpd

state: installed

- name: start and enable service

service:

name: httpd

state: started

enabled: yes

[ansible@control base]$ ansible-playbook --syntax-check playbook.yaml

playbook: playbook.yamlDoing a dry run

Use the -C flag to perform a dry run. This will check the success status of all of the tasks without actually making any changes.

ansible-playbook -C playbook.yaml

Multiple play playbooks

Using multiple plays in a playbook lets you set up one group of servers with one configuration and another group with a different configuration. Each play has it’s own list of hosts to address.

You can also specify different parameters in each play such as become: or the remote_user: parameters.

Try to keep playbooks small. As bigger playbooks will be harder to troubleshoot. You can use include: to include other playbooks. Other than troubleshooting, using smaller playbooks lets you use your playbooks in a flexible way to perform a wider range of tasks.

Here is an example of a playbook with two plays:

---

- name: install start and enable httpd <--- play 1

hosts: all

tasks:

- name: install package

yum:

name: httpd

state: installed

- name: start and enable service

service:

name: httpd

state: started

enabled: yes

- name: test httpd accessibility <-- play 2

hosts: localhost

tasks:

- name: test httpd access

uri:

url: http://ansible1Verbose output options

You can increase the output of verbosity to an amount hitherto undreamt of. This can be useful for troubleshooting.

Verbose output of the playbook above showing task results:

[ansible@control base]$ ansible-playbook -v playbook.yaml

Verbose output of the playbook above showing task results and task configuration:

[ansible@control base]$ ansible-playbook -vv playbook.yaml

Verbose output of the playbook above showing task results, task configuration, and info about connections to managed hosts:

[ansible@control base]$ ansible-playbook -vvv playbook.yaml

Verbose output of the playbook above showing task results, task configuration, and info about connections to managed hosts, plug-ins, user accounts, and executed scripts:

[ansible@control base]$ ansible-playbook -vvvv playbook.yaml

Lab playbook

Now we know enough to create and enable a simple webserver. Here is a playbook example. Just make sure to download the posix collection or you won’t be able to use the firewalld module:

[ansible@control base]$ ansible-galaxy collection install ansible.posix

[ansible@control base]$ cat playbook.yaml

---

- name: Enable web server

hosts: ansible1

tasks:

- name: install package

yum:

name:

- httpd

- firewalld

state: installed

- name: Create welcome page

copy:

content: "Welcome to the webserver!\n"

dest: /var/www/html/index.html

- name: start and enable service

service:

name: httpd

state: started

enabled: yes

- name: enable firewall

service:

name: firewalld

state: started

enabled: true

- name: Open service in firewall

firewalld:

service: http

permanent: true

state: enabled

immediate: yes

- name: test webserver accessibility

hosts: localhost

become: no

tasks:

- name: test webserver access

uri:

url: http://ansible1

return_content: yes <-- Return the body of the response as a content key in the dictionary result

status_code: 200 <--After running this playbook, you should be able to reach the webserver at http://ansible1

With return content and status code

ok: [localhost] => {"accept_ranges": "bytes", "changed": false, "connection": "close", "content": "Welcome to the webserver!\n", "content_length": "26", "content_type": "text/html; charset=UTF-8", "cookies": {}, "cookies_string": "", "date": "Thu, 10 Apr 2025 12:12:37 GMT", "elapsed": 0, "etag": "\"1a-6326b4cfb4042\"", "last_modified": "Thu, 10 Apr 2025 11:58:14 GMT", "msg": "OK (26 bytes)", "redirected": false, "server": "Apache/2.4.62 (Red Hat Enterprise Linux)", "status": 200, "url": "http://ansible1"}Adds this: "content": "Welcome to the webserver!\n" and this: "status": 200, "url": "http://ansible1"} to verbose output for that task.

Ansible Roles

Work with roles and Create roles

Using Ansible Roles

- Ready-to-use playbook-based Ansible solutions that you can easily include in your own playbooks.

- Community roles are provided through Ansible Galaxy

- Also possible to create your own roles.

- Red Hat provides RHEL System Roles.

- Roles make it possible to provide Ansible code in a reusable way.

- You can easily define a specific task in a role, and after defining it in a role, you can easily redistribute that and ensure that tasks are handled the same way, no matter where they are executed.

- Roles can be custom-made for specific environments, or default roles provided from Ansible Galaxy can be used.

Understanding Ansible Roles

- work with include files.

- All the different components that you may use in a playbook are used in roles and stored in separate directories.

- While defining the role, you don’t need to tell the role that it should look in some of these specific directories; it does that automatically.

- The only thing you need to do is tell your Ansible playbook that it should include a role.

- Different components of the role are stored in different subdirectories.

Roles Sample Directory Structure:

[ansible@control roles]$ tree testrole/

testrole/

|-- defaults

| `-- main.yml

|-- files

|-- handlers

| `-- main.yml

|-- meta

| `-- main.yml

|-- README.md

|-- tasks

| `-- main.yml

|-- templates

|-- tests

| |-- inventory

| `-- test.yml

`-- vars

`-- main.ymlRole Directory Structure defaults

-

Default variables that may be overwritten by other variables files

-

Static files that are needed by role tasks handlers

-

Handlers for use in this role meta

-

metadata, such as dependencies, plus license and maintainer information tasks

-

Role task definitions templates

-

Jinja2 templates tests

-

Optional inventory and a test.yml file to test the role vars

-

Variables that are not meant to be overwritten

-

Most of the role directories have a main.yml file.

-

This is the entry-point YAML file that is used to define components in the role.

Understanding Role Location

Roles can be stored in different locations:

./roles

- store roles in the current project directory.

- highest precedence.

~/.ansible/roles

- exists in the current user home directory and makes the role available to the current user only.

- second-highest precedence.

/etc/ansible/roles

- Where roles are stored to make them accessible to any user.

/usr/share/ansible/roles

- Where roles are stored after they are installed from RPM files.

- lowest precedence

- should not be used for storing custom-made roles.

ansible-galaxy init { newrolename }

- create a custom role

- creates the default role directory structure with a main.yml file

- includes sample files

Using Roles from Playbooks

- Call roles in a playbook the same way you call a task

- Roles are included as a list.

---

- name: include some roles

roles:

- role1

- role2- Roles are executed before the tasks.

- In specific cases you might have to execute tasks before the roles. To do so, you can specify these tasks in a pre_tasks section.

- Also, it’s possible to use the post_tasks section to include tasks that will be executed after the roles, but also after tasks specified in the playbook as well as the handlers they call.

Creating Custom Roles

- Use

mkdir rolesto create a roles subdirectory in the current directory, and usecd rolesto get into that subdirectory. - Use

ansible-galaxy init motdto create the motd role structure. - Add contents to motd/tasks/main.yml

- Add contents to motd/templates/motd.j2

- Add contents to motd/defaults/main.yml

- Add contents to motd/meta/main.yml

- Create the playbook exercise91.yaml to run the role

- Run the playbook by using

ansible-playbook exercise91.yaml - Verify that modifications have been applied correctly by using the ad hoc command

ansible ansible2 -a "cat /etc/motd"

Sample role all under roles/motd/:

defaults/main.yml

---

# defaults file for motd

system_manager: anna@example.commeta/main.yml

galaxy_info:

author: Sander van V

description: your description

company: your company (optional)

license: license (GPLv2, CC-BY, etc)

min_ansible_version: 2.5tasks/main.yml

---

tasks file for motd

- name: copy motd file

template:

src: templates/motd.j2

dest: /etc/motd

owner: root

group: root

mode: 0444templates/motd.j2

Welcome to {{ ansible_hostname }}

This file was created on {{ ansible_date_time.date }}

Disconnect if you have no business being here

Contact {{ system_manager }} if anything is wrongPlaybook motd.yml:

---

- name: use the motd role playbook

hosts: ansible2

roles:

- role: motd

system_manager: bob@example.comhandlers/main.yml example:

---

# handlers file for base-config

- name: source profile

command: source /etc/profile

- name: source bash

command: source /etc/bash.bashrc Managing Role Dependencies

- Roles may use other roles as a dependency.

- You can put role dependencies in meta/main.yml

- Dependent roles are always executed before the roles that depend on them.

- Dependent roles are executed once.

- When two roles that are used in a playbook call the same dependency, the dependent role is executed once only.

- When calling dependent roles, it is possible to pass variables to the dependent role.

- You can define a when statement to ensure that the dependent role is executed only in specific situations.

Defining dependencies in meta/main.yml

dependencies:

- role: apache

port: 8080

- role: mariabd

when: environment == ’production’Understanding File Organization Best Practices

-

Working with roles splits the contents of the role off the tasks that are run through the playbook.

-

Splitting files to store them in a location that makes sense is common in Ansible

-

When you’re working with Ansible, it’s a good idea to work with project directories in bigger environments.

-

Working with project directories makes it easier to delegate tasks and have the right people responsible for the right things.

-

Each project directory may have its own ansible.cfg file, inventory file, and playbooks.

-

If the project grows bigger, variable files and other include files may be used, and they are normally stored in subdirectories.

-

At the top-level directory, create the main playbook from which other playbooks are included. The suggested name for the main playbook is site.yml.

-

Use group_vars/ and host_vars/ to set host-related variables and do not define them in inventory.

-

Consider using different inventory files to differentiate between production and staging phases.

-

Use roles to standardize common tasks.

When you are working with roles, some additional recommendations apply:

-

Use a version control repository to maintain roles in a consistent way. Git is commonly used for this purpose.

-

Sensitive information should never be included in roles. Use Ansible Vault to store sensitive information in an encrypted way.

-

Use

ansible-galaxy initto create the role base structure. Remove files and directories you don’t use. -

Don’t forget to provide additional information in the role’s README.md and meta/main.yml files.

-

Keep roles focused on a specific function. It is better to use multiple roles to perform multiple tasks.

-

Try to develop roles in a generic way, such that they can be used for multiple purposes.

Lab 9-1

Create a playbook that starts the Nginx web server on ansible1, according to the following requirements: • A requirements file must be used to install the Nginx web server. Do NOT use the latest version of the Galaxy role, but instead use the version before that. • The same requirements file must also be used to install the latest version of postgresql. • The playbook needs to ensure that neither httpd nor mysql is currently installed.

Lab 9-2

Use the RHEL SELinux System Role to manage SELinux properties according to the following requirements:

• A Boolean is set to allow SELinux relabeling to be automated using

cron.

• The directory /var/ftp/uploads is created, permissions are set to 777,

and the context label is set to public_content_rw_t.

• SELinux should allow web servers to use port 82 instead of port 80.

• SELinux is in enforcing state.

Subjects:

ansible-playbook timesync.yaml to run the playbook. Observe its output. Notice that some messages in red are shown, but these can safely be ignored.

5. Use ansible ansible2 -a "timedatectl show" and notice that the timezone variable is set to UTC.

Lab 9-1

Create a playbook that starts the Nginx web server on ansible1, according to the following requirements:

• A requirements file must be used to install the Nginx web server. Do NOT use the latest version of the Galaxy role, but instead use the version before that.

• The same requirements file must also be used to install the latest version of postgresql.

ansible-galaxy install -r roles/requirements.yml

cat roles/requirements.yml

- src: geerlingguy.nginx

version: "3.1.4"

- src: geerlingguy.postgresql• The playbook needs to ensure that neither httpd nor mysql is currently installed.

---

- name: ensure conflicting packages are not installed

hosts: web1

tasks:

- name: remove packages

yum:

name:

- mysql

- httpd

state: absent

- name: nginx web server

hosts: web1

roles:

- geerlingguy.nginx

- geerlingguy.postgresql(Had to add a variable file for redhat 10 into the role. )

Lab 9-2

Use the RHEL SELinux System Role to manage SELinux properties according to the following requirements:

• A Boolean is set to allow SELinux relabeling to be automated using cron. • The directory /var/ftp/uploads is created, permissions are set to 777, and the context label is set to public_content_rw_t. • SELinux should allow web servers to use port 82 instead of port 80. • SELinux is in enforcing state.

vim lab92.yml

---

- name: manage ftp selinux properties

hosts: ftp1

vars:

selinux_booleans:

- name: cron_can_relabel

state: true

persistent: true

selinux_state: enforcing

selinux_ports:

- ports: 82

proto: tcp

setype: http_port_t

state: present

local: true

tasks:

- name: create /var/ftp/uploads/

file:

path: /var/ftp/uploads

state: directory

mode: 777

- name: set selinux context

sefcontext:

target: '/var/ftp/uploads(/.*)?'

setype: public_content_rw_t

ftype: d

state: present

notify: run restorecon

- name: Execute the role and reboot in a rescue block

block:

- name: Include selinux role

include_role:

name: rhel-system-roles.selinux

rescue:

- name: >-

Fail if failed for a different reason than selinux_reboot_required

fail:

msg: "role failed"

when: not selinux_reboot_required

- name: Restart managed host

reboot:

- name: Wait for managed host to come back

wait_for_connection:

delay: 10

timeout: 300

- name: Reapply the role

include_role:

name: rhel-system-roles.selinux

handlers:

- name: run restorecon

command: restorecon -v /var/ftp/uploadsAnsible Vault

Ansible Vault

- For webkeys, passwords, and other types of sensitive data that you really shouldn’t store as plain text in a playbook.

- Can use Ansible Vault to encrypt and decrypt sensitive data to make it unreadable, and only while accessing data does it ask for a password so that it is decrypted.

1. Sensitive data is stored as values in variables in a separate variable file. 2. The variable file is encrypted, using the ansible-vault command. 3. While accessing the variable file from a playbook, you enter a password to decrypt.

Managing Encrypted Files

ansible-vault create secret.yaml

- Ansible Vault prompts for a password and then opens the file using the default editor.

- The password can be provided in a password file.(must be really well protected (for example, by putting it in the user root home directory))

- If a password file is used, the encrypted variable file can be created using

ansible-vault create \--vault-password-file=passfile secret.yaml

ansible-vault encrypt

- encrypt one or more existing files.

- The encrypted file can next be used from a playbook, where a password needs to be entered to decrypt.

ansible-vault decrypt

- used to decrypt the file.

Commonly used ansible-vault commands:

create

- Creates new encrypted file

encrypt - Encrypts an existing file

encrypt_string - Encrypts a string

decrypt - Decrypts an existing file

rekey - Changes password on an existing file

view - Shows contents of an existing file

edit - Edits an existing encrypted file

Using Vault in Playbooks

--vault-id @prompt

- When a Vault-encrypted file is accessed from a playbook, a password must be entered.

- Has the

ansible-playbookcommand prompt for a password for each of the Vault-encrypted files that may be used - Enables a playbook to work with multiple Vault-encrypted files where these files are allowed to have different passwords set.

ansible-playbook --ask-vault-pass

- Used if all Vault-encrypted files a playbook refers to have the same password set.

ansible-playbook --vault-password-file=secret

- Obtain the Vault password from a password file.

- Password file should contain a string that is stored as a single line in the file.

- Make sure the vault password file is protected through file permissions, such that it is not accessible by unauthorized users!

Managing Files with Sensitive Variables

-

You should separate files containing unencrypted variables from files that contain encrypted variables.

-

Use group_vars and host_vars variable inclusion for this.

-

You may create a directory (instead of a file) with the name of the host or host group.

-

Within that directory you can create a file with the name vars, which contains unencrypted variables, and a file with the name vault, which contains Vault-encrypted variables.

-

Vault-encrypted variables can be included from a file using the

vars_filesparameter.

Lab: Working with Ansible Vault

1. Create a secret file containing encrypted values for a variable user and a variable password by using ansible-vault create secrets.yaml

Set the password to password and enter the following lines:

username: bob

pwhash: passwordWhen creating users, you cannot provide the password in plain text; it needs to be provided as a hashed value. Because this exercise focuses on the use of Vault, the password is not provided as a hashed value, and as a result, a warning is displayed. You may ignore this warning.

2. Create the file create-users.yaml and provide the following contents:

---

- name: create a user with vaulted variables

hosts: ansible1

vars_files:

- secrets.yaml

tasks:

- name: creating user

user:

name: "{{ username }}"

password: "{{ pwhash }}"3. Run the playbook by using ansible-playbook --ask-vault-pass create-users.yaml

4. Change the current password on secrets.yaml by using ansible-vault rekey secrets.yaml and set the new password to

secretpassword.

5. To automate the process of entering the password, use echo secretpassword > vault-pass

6. Use chmod 400 vault-pass to ensure the file is readable for the ansible user only; this is about as much as you can do to secure the file.

7. Verify that it’s working by using ansible-playbook --vault-password-file=vault-pass create-users.yaml

JunctionScallopPoise

Ansible-inventory command

Inventory commands:

To view the inventory, specify the inventory file such as ~/base/inventory in the command line. You can name the inventory file anything you want. You can also set the default in the ansible.cfg file.

View the current inventory:

ansible -i inventory <pattern> --list-hosts

List inventory hosts in JSON format:

ansible-inventory -i inventory --list

Display overview of hosts as a graph:

ansible-inventory -i inventory --graph

In our lab example:

[ansible@control base]$ pwd

/home/ansible/base

[ansible@control base]$ ls

inventory

[ansible@control base]$ cat inventory

ansible1

ansible2

[web]

web1

web2

[ansible@control base]$ ansible-inventory -i inventory --graph

@all:

|--@ungrouped:

| |--ansible1

| |--ansible2

|--@web:

| |--web1

| |--web2

[ansible@control base]$ ansible-inventory -i inventory --list

{

"_meta": {

"hostvars": {}

},

"all": {

"children": [

"ungrouped",

"web"

]

},

"ungrouped": {

"hosts": [

"ansible1",

"ansible2"

]

},

"web": {

"hosts": [

"web1",

"web2"

]

}

}

[ansible@control base]$ ansible -i inventory all --list-hosts

hosts (4):

ansible1

ansible2

web1

web2

[ansible@control base]$ ansible -i inventory ungrouped --list-hosts

hosts (2):

ansible1

ansible2Using the ansible-inventory Command

- default output of a dynamic inventory script is unformatted.

- To show formatted JSON output of the scripts, you can use the

ansible-inventorycommand. - Apart from the

--listand--hostoptions, this command also uses the--graphoption to show a list of hosts, including the host groups they are a member of.

[ansible@control rhce8-book]$ ansible-inventory -i listing101.py --graph

[WARNING]: A duplicate localhost-like entry was found (localhost). First found

localhost was 127.0.0.1

@all:

|--@ungrouped:

| |--127.0.0.1

| |--192.168.4.200

| |--192.168.4.201

| |--192.168.4.202

| |--ansible1

| |--ansible1.example.com

| |--ansible2

| |--ansible2.example.com

| |--control

| |--control.example.com

| |--localhost

| |--localhost.localdomain

| |--localhost4

| |--localhost4.localdomain4

| |--localhost6

| |--localhost6.localdomain6Ansible.cfg

ansible.cfg

You can store this in a project’s directory or a user’s home directory, in the case that multiple user’s want to have their own Ansible configuration. Or in /etc/ansible if the configuration will be the same for every user and every project. You can also specify these settings in Ansible playbooks. The settings in a playbook take precedence over the .cfg file.

ansible.cfg precedence (Ansible uses the first one it finds and ignores the rest.)

- ANSIBLE_CONFIG environment variable

- ansible.cfg in current directory

- ~/.ansible.cfg

- /etc/ansible/ansible.cfg

Generate an example config file in the current directory. All directive are commented out by default:

[ansible@control base]$ ansible-config init --disabled > ansible.cfg

Include existing plugin to the file:

ansible-config init --disabled -t all > ansible.cfg

This generates an extremely large file. So I’ll just show Van Vugt’s example in .ini format:

[defaults] <-- General information

remote_user = ansible <--Required

host_key_checking = false <-- Disable SSH host key validity check

inventory = inventory

[privilege_escalation] <-- Define how ansible user requires admin rights to connect to hosts

become = True <-- Escalation required

become_method = sudo

become_user = root <-- Escalated user

become_ask_pass = False <-- Do not ask for escalation passwordPrivilege escalation parameters can be specified in ansible.cfg, playbooks, and on the command line.

Boot Process

Managing the Boot Process

No modules for managing boot process.

file module

- manage the systemd boot targets

lineinfile module

- manage the GRUB configuration.

reboot module

- enables you to reboot a host and pick up after the reboot at the exact same location.

Managing Systemd Targets

To manage the default systemd target:

- /etc/systemd/system/default.target file must exist as a symbolic link to the desired default target.

ls -l /etc/systemd/system/default.target

lrwxrwxrwx. 1 root root 37 Mar 23 05:33 /etc/systemd/system/default.target -> /lib/systemd/system/multi-user.target---

- name: set default boot target

hosts: ansible2

tasks:

- name: set boot target to graphical

file:

src: /usr/lib/systemd/system/graphical.target

dest: /etc/systemd/system/default.target

state: linkRebooting Managed Hosts

reboot module.

- Restart managed nodes.

test_command argument

- Verify the renewed availability of the managed hosts

- Specifies an arbitrary command that Ansible should run successfully on the managed hosts after the reboot. The success of this command indicates that the rebooted host is available again.

Equally useful while using the reboot module are the arguments that relate to timeouts. The reboot module uses no fewer than four of them:

• connect_timeout: The maximum seconds to wait for a successful connection before trying again

• post_reboot_delay: The number of seconds to wait after the reboot command before trying to validate the managed host is available again

• pre_reboot_delay: The number of seconds to wait before actually issuing the reboot

• reboot_timeout: The maximum seconds to wait for the rebooted machine to respond to the test command

When the rebooted host is back, the current playbook continues its tasks. This scenario is shown in the example in Listing 14-7, where first all managed hosts are rebooted, and after a successful reboot is issued, the message “successfully rebooted” is shown. Listing 14-8 shows the result of running this playbook. In Exercise 14-2 you can practice rebooting hosts using the reboot module.

Listing 14-7 Rebooting Managed Hosts

::: pre_1 — - name: reboot all hosts hosts: all gather_facts: no tasks: - name: reboot hosts reboot: msg: reboot initiated by Ansible test_command: whoami - name: print message to show host is back debug: msg: successfully rebooted :::

Listing 14-8 Verifying the Success of the reboot Module

::: pre_1 [ansible@control rhce8-book]$ ansible-playbook listing147.yaml

PLAY [reboot all hosts] *************************************************************************************************

TASK [reboot hosts] *****************************************************************************************************

changed: [ansible2]

changed: [ansible1]

changed: [ansible3]

changed: [ansible4]

changed: [ansible5]

TASK [print message to show host is back] *******************************************************************************

ok: [ansible1] => {

"msg": "successfully rebooted"

}

ok: [ansible2] => {

"msg": "successfully rebooted"

}

ok: [ansible3] => {

"msg": "successfully rebooted"

}

ok: [ansible4] => {

"msg": "successfully rebooted"

}

ok: [ansible5] => {

"msg": "successfully rebooted"

}

PLAY RECAP **************************************************************************************************************

ansible1 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

ansible2 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

ansible3 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

ansible4 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

ansible5 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

:::

::: box Exercise 14-2 Managing Boot State

1. As a preparation for this playbook, so that it actually changes the default boot target on the managed host, use ansible ansible2 -m file -a “state=link src=/usr/lib/systemd/system/graphical.target dest=/etc/systemd/system/default.target”.

2. Use your editor to create the file exercise142.yaml and write the following playbook header:

---

- name: set default boot target and reboot

hosts: ansible2

tasks:3. Now you set the default boot target to multi-user.target. Add the following task to do so:

- name: set default boot target

file:

src: /usr/lib/systemd/system/multi-user.target

dest: /etc/systemd/system/default.target

state: link4. Complete the playbook to reboot the managed hosts by including the following tasks:

- name: reboot hosts

reboot:

msg: reboot initiated by Ansible

test_command: whoami

- name: print message to show host is back

debug:

msg: successfully rebooted5. Run the playbook by using ansible-playbook exercise142.yaml.

6. Test that the reboot was issued successfully by using ansible ansible2 -a “systemctl get-default”. :::

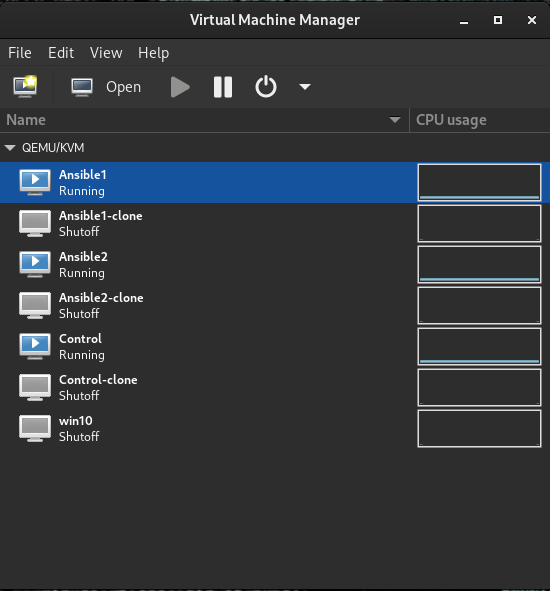

Building an Ansible lab with Ansible

When I started studying for RHCE, the study guide had me manually set up virtual machines for the Ansible lab environment. I thought.. Why not start my automation journey right, and automate them using Vagrant.

I use Libvirt to manage KVM/QEMU Virtual Machines and the Virt-Manager app to set them up. I figured I could use Vagrant to automatically build this lab from a file. And I got part of the way. I ended up with this Vagrant file:

Vagrant.configure("2") do |config|

config.vm.box = "almalinux/9"

config.vm.provider :libvirt do |libvirt|

libvirt.uri = "qemu:///system"

libvirt.cpus = 2

libvirt.memory = 2048

end

config.vm.define "control" do |control|

control.vm.network "private_network", ip: "192.168.124.200"

control.vm.hostname = "control.example.com"

end

config.vm.define "ansible1" do |ansible1|

ansible1.vm.network "private_network", ip: "192.168.124.201"

ansible1.vm.hostname = "ansible1.example.com"

end

config.vm.define "ansible2" do |ansible2|

ansible2.vm.network "private_network", ip: "192.168.124.202"

ansible2.vm.hostname = "ansible2.example.com"

end

endI could run this Vagrant file and Build and destroy the lab in seconds. But there was a problem. The Libvirt plugin, or Vagrant itself, I’m not sure which, kept me from doing a couple important things.

First, I could not specify the initial disk creation size. I could add additional disks of varying sizes but, if I wanted to change the size of the first disk, I would have to go back in after the fact and expand it manually…

Second, the Libvirt plugin networking settings were a bit confusing. When you add the private network option as seen in the Vagrant file, it would add this as a secondary connection, and route everything through a different public connection.

Now I couldn’t get the VMs to run using the public connection for whatever reason, and it seems the only workaround was to make DHCP reservations for the guests Mac addresses which gave me even more problems to solve. But I won’t go there..

So why not get my feet wet and learn how to deploy VMs with Ansible? This way, I would get the granularity and control that Ansible gives me, some extra practice with Ansible, and not having to use software that has just enough abstraction to get in the way.

The guide I followed to set this up can be found on Redhat’s blog here. And it was pretty easy to set up all things considered.

I’ll rehash the steps here:

- Download a cloud image

- Customize the image

- Install and start a VM

- Access the VM

Creating the role

Move to roles directory

cd roles

Initialize the role

ansible-galaxy role init kvm_provision

Switch into the role directory

cd kvm_provision/

Remove unused directories

rm -r files handlers vars

Define variables

Add default variables to main.yml

cd defaults/ && vim main.yml

---

# defaults file for kvm_provision

base_image_name: AlmaLinux-9-GenericCloud-9.5-20241120.x86_64.qcow2

base_image_url: https://repo.almalinux.org/almalinux/9/cloud/x86_64/images/{{ base_image_name }}

base_image_sha: abddf01589d46c841f718cec239392924a03b34c4fe84929af5d543c50e37e37

libvirt_pool_dir: "/var/lib/libvirt/images"

vm_name: f34-dev

vm_vcpus: 2

vm_ram_mb: 2048

vm_net: default

vm_root_pass: test123

cleanup_tmp: no

ssh_key: /root/.ssh/id_rsa.pub

# Added option to configure ip address

ip_addr: 192.168.124.250

gw_addr: 192.168.124.1

# Added option to configure disk size

vm_disksize: 20Defining a VM template

The community.libvirt.virt module is used to provision a KVM VM. This module uses a VM definition in XML format with libvirt syntax. You can dump a VM definition of a current VM and then convert it to a template from there. Or you can just use this:

cd templates/ && vim vm-template.xml.j2

<domain type='kvm'>

<name>{{ vm_name }}</name>

<memory unit='MiB'>{{ vm_ram_mb }}</memory>

<vcpu placement='static'>{{ vm_vcpus }}</vcpu>

<os>

<type arch='x86_64' machine='pc-q35-5.2'>hvm</type>

<boot dev='hd'/>

</os>

<cpu mode='host-model' check='none'/>

<devices>

<emulator>/usr/bin/qemu-system-x86_64</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2'/>

<source file='{{ libvirt_pool_dir }}/{{ vm_name }}.qcow2'/>

<target dev='vda' bus='virtio'/>

<address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>

<!-- Added: Specify the disk size using a variable -->

<size unit='GiB'>{{ disk_size }}</size>

</disk>

<interface type='network'>

<source network='{{ vm_net }}'/>

<model type='virtio'/>

<address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>

</interface>

<channel type='unix'>

<target type='virtio' name='org.qemu.guest_agent.0'/>

<address type='virtio-serial' controller='0' bus='0' port='1'/>

</channel>

<channel type='spicevmc'>

<target type='virtio' name='com.redhat.spice.0'/>

<address type='virtio-serial' controller='0' bus='0' port='2'/>

</channel>

<input type='tablet' bus='usb'>

<address type='usb' bus='0' port='1'/>

</input>

<input type='mouse' bus='ps2'/>

<input type='keyboard' bus='ps2'/>

<graphics type='spice' autoport='yes'>

<listen type='address'/>

<image compression='off'/>

</graphics>

<video>

<model type='qxl' ram='65536' vram='65536' vgamem='16384' heads='1' primary='yes'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0'/>

</video>

<memballoon model='virtio'>

<address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>

</memballoon>

<rng model='virtio'>

<backend model='random'>/dev/urandom</backend>

<address type='pci' domain='0x0000' bus='0x07' slot='0x00' function='0x0'/>

</rng>

</devices>

</domain>The template uses some of the variables from earlier. This allows flexibility to changes things by just changing the variables.

Define tasks for the role to perform

cd ../tasks/ && vim main.yml

---

# tasks file for kvm_provision

# ensure the required package dependencies `guestfs-tools` and `python3-libvirt` are installed. This role requires these packages to connect to `libvirt` and to customize the virtual image in a later step. These package names work on Fedora Linux. If you're using RHEL 8 or CentOS, use `libguestfs-tools` instead of `guestfs-tools`. For other distributions, adjust accordingly.

- name: Ensure requirements in place

package:

name:

- guestfs-tools

- python3-libvirt

state: present

become: yes

# obtain a list of existing VMs so that you don't overwrite an existing VM on accident. uses the `virt` module from the collection `community.libvirt`, which interacts with a running instance of KVM with `libvirt`. It obtains the list of VMs by specifying the parameter `command: list_vms` and saves the results in a variable `existing_vms`. `changed_when: no` for this task to ensure that it's not marked as changed in the playbook results. This task doesn't make any change in the machine; it only checks the existing VMs. This is a good practice when developing Ansible automation to prevent false reports of changes.

- name: Get VMs list

community.libvirt.virt:

command: list_vms

register: existing_vms

changed_when: no

#execute only when the VM name the user provides doesn't exist. And uses the module `get_url` to download the base cloud image into the `/tmp` directory

- name: Create VM if not exists

block:

- name: Download base image

get_url:

url: "{{ base_image_url }}"

dest: "/tmp/{{ base_image_name }}"

checksum: "sha256:{{ base_image_sha }}"

# copy the file to libvirt's pool directory so we don't edit the original, which can be used to provision other VMS later

- name: Copy base image to libvirt directory

copy:

dest: "{{ libvirt_pool_dir }}/{{ vm_name }}.qcow2"

src: "/tmp/{{ base_image_name }}"

force: no

remote_src: yes

mode: 0660

register: copy_results

-

# Resize the VM disk

- name: Resize VM disk

command: qemu-img resize "{{ libvirt_pool_dir }}/{{ vm_name }}.qcow2" "{{ disk_size }}G"

when: copy_results is changed

# uses command module to run virt-customize to customize the image

- name: Configure the image

command: |

virt-customize -a {{ libvirt_pool_dir }}/{{ vm_name }}.qcow2 \

--hostname {{ vm_name }} \

--root-password password:{{ vm_root_pass }} \

--ssh-inject 'root:file:{{ ssh_key }}' \

--uninstall cloud-init --selinux-relabel

# Added option to configure an IP address

--firstboot-command "nmcli c m eth0 con-name eth0 ip4 {{ ip_addr }}/24 gw4 {{ gw_addr }} ipv4.method manual && nmcli c d eth0 && nmcli c u eth0"

when: copy_results is changed

- name: Define vm

community.libvirt.virt:

command: define

xml: "{{ lookup('template', 'vm-template.xml.j2') }}"

when: "vm_name not in existing_vms.list_vms"

- name: Ensure VM is started

community.libvirt.virt:

name: "{{ vm_name }}"

state: running

register: vm_start_results

until: "vm_start_results is success"

retries: 15

delay: 2

- name: Ensure temporary file is deleted

file:

path: "/tmp/{{ base_image_name }}"

state: absent

when: cleanup_tmp | boolChanged my user to own the libvirt directory:

chown -R david:david /var/lib/libvirt/images

Create playbook kvm_provision.yaml

---

- name: Deploys VM based on cloud image

hosts: localhost

gather_facts: yes

become: yes

vars:

pool_dir: "/var/lib/libvirt/images"

vm: control

vcpus: 2

ram_mb: 2048

cleanup: no

net: default

ssh_pub_key: "/home/davidt/.ssh/id_ed25519.pub"

disksize: 20

tasks:

- name: KVM Provision role

include_role:

name: kvm_provision

vars:

libvirt_pool_dir: "{{ pool_dir }}"

vm_name: "{{ vm }}"

vm_vcpus: "{{ vcpus }}"

vm_ram_mb: "{{ ram_mb }}"

vm_net: "{{ net }}"

cleanup_tmp: "{{ cleanup }}"

ssh_key: "{{ ssh_pub_key }}"Add the libvirt collection

ansible-galaxy collection install community.libvirt

Create a VM with a new name

ansible-playbook -K kvm_provision.yaml -e vm=ansible1

–run-command ’nmcli c a type Ethernet ifname eth0 con-name eth0 ip4 192.168.124.200 gw4 192.168.124.1'

parted /dev/vda resizepargit t 4 100%

Warning: Partition /dev/vda4 is being used. Are you sure you want to continue?

Yes/No? y

Information: You may need to update /etc/fstab.

lsblk NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS vda 252:0 0 20G 0 disk ├─vda2 252:2 0 200M 0 part /boot/efi ├─vda3 252:3 0 1G 0 part /boot └─vda4 252:4 0 8.8G 0 part /

variables {{ ansible_user }} {{ ansible_password }} {{ gw_addr }} {{ ip_addr }}

; useradd -m -p {{ ansible_user }} ; chage -d 0 {{ ansible_user }} ; cat {{ ansible_password }} > passwd {{ ansible_user }} –stdin" \

- name: Configure the image

command: |

virt-customize -a {{ libvirt_pool_dir }}/{{ vm_name }}.qcow2 \

--hostname {{ vm_name }} \

--root-password password:{{ vm_root_pass }} \

--uninstall cloud-init --selinux-relabel \

--firstboot-command "nmcli c m eth0 con-name eth0 ip4 \

{{ ip_addr }}/24 gw4 {{ gw_addr }} \

ipv4.method manual && nmcli c d eth0 \

&& nmcli c u eth0 && adduser \

{{ ansible_user }} && echo \

"{{ ansible_password }}" | passwd \

--stdin {{ ansible_user }}"

when: copy_results is changed

- name: Add ssh keys

command: |

virt-customize -a {{ libvirt_pool_dir }}/{{ vm_name }}.qcow2 \

--ssh-inject '{{ ansible_user }}:file:{{ ssh_key }}'Common modules with examples

uri: Interacts with basic http and https web services. (Verify connectivity to a web server +9)

Test httpd accessibility:

uri:

url: http://ansible1Show result of the command while running the playbook:

uri:

url: http://ansible1

return_content: yesShow the status code that signifies the success of the request:

uri:

url: http://ansible1

status_code: 200debug: Prints statements during execution. Used for debugging variables or expressions without stopping a playbook.

Print out the value of the ansible_facts variable:

debug:

var: ansible_factsDeploying files

This chapter covers the following subjects:

• Using Modules to Manipulate Files • Managing SELinux Properties • Using Jinja2 Templates

RHCE exam topics

• Use Ansible modules for system administration tasks that work with: • File contents • Use advanced Ansible features • Create and use templates to create customized configuration files

Using Modules to Manipulate Files

File Module Manipulation Overview

Common modules to manipulate files copy

- Copies files to remote locations fetch

- Fetches files from remote locations file

- Manage file and file properties

- Create new files or directories

- Create links

- Remove files

- Set permissions and ownership

acl

- Work with file system ACLs find

- Find files based on properties lineinfile

- Manages lines in text files blockinfile

- Manage blocks in text files replace

- Replaces strings in text files based on regex synchronize

- Performs rsync-based synchronization tasks stat

- Retrieves file or file system status

- enables you to retrieve file status information.

- gets status information and is not used to change anything

- use it to check specific file and perform an action if the properties are not set as expected. Shows:

- which permission mode is set,

- whether it is a link,

- which checksum is set on the file

- etc.

- See

ansible-doc statfor list of full output

Lab: View information about /etc/hosts file

- name: stat module tests

hosts: ansible1

tasks:

- stat:

path: /etc/hosts

register: st

- name: show current values

debug:

msg: current value of the st variable is {{ st }}Lab: write a message if the expected permission mode is not set.

---

- name: stat module test

hosts: ansible1

tasks:

- command: touch /tmp/statfile

- stat:

path: /tmp/statfile

register: st

- name: show current values

debug:

msg: current value of the st variable is {{ st }}

- fail:

msg: "unexpected file mode, should be set to 0640"

when: st.stat.mode != '0640' Lab: Use the file Module to Correct File Properties Discovered with stat

---

- name: stat module tests

hosts: ansible1

tasks:

- command: touch /tmp/statfile

- stat:

path: /tmp/statfile

register: st

- name: show current values

debug:

msg: current value of the st variable is {{ st }}

- name: changing file permissions if that's needed

file:

path: /tmp/statfile

mode: 0640

when: st.stat.mode != '0640'Managing File Contents

Use lineinfile or blockinfile instead of copy to manage text in a file

Lab: Change a string, based on a regular expression.

---

- name: configuring SSH

hosts: all

tasks:

- name: disable root SSH login

lineinfile:

dest: /etc/ssh/sshd_config

regexp: "^PermitRootLogin"

line: "PermitRootLogin no"

notify: restart sshd

handlers:

- name: restart sshd

service:

name: sshd

state: restartedLab: Manipulate multiple lines

---

- name: modifying file

hosts: all

tasks:

- name: ensure /tmp/hosts exists

file:

path: /tmp/hosts

state: touch

- name: add some lines to /tmp/hosts

blockinfile:

path: /tmp/hosts

block: |

192.168.4.110 host1.example.com

192.168.4.120 host2.example.com

state: presentWhen blockinfile is used, the text specified in the block is copied with a start and end indicator.

[ansible@ansible1 ~]$ cat /tmp/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.122.201 ansible1

192.168.122.202 ansible2

192.168.122.203 ansible3

# BEGIN ANSIBLE MANAGED BLOCK

192.168.4.110 host1.example.com

192.168.4.120 host2.example.com

# END ANSIBLE MANAGED BLOCKLab: Creating and Removing Files

Use the file module to create a new directory and in that directory create an empty file, then remove the directory recursively.

---

- name: using the file module

hosts: ansible1

tasks:

- name: create directory

file:

path: /newdir

owner: ansible

group: ansible

mode: 770

state: directory

- name: create file in that directory

file:

path: /newdir/newfile

state: touch

- name: show the new file

stat:

path: /newdir/newfile

register: result

- debug:

msg: |

This shows that newfile was created

"{{ result }}"

- name: removing everything again

file:

path: /newdir